How does it work?

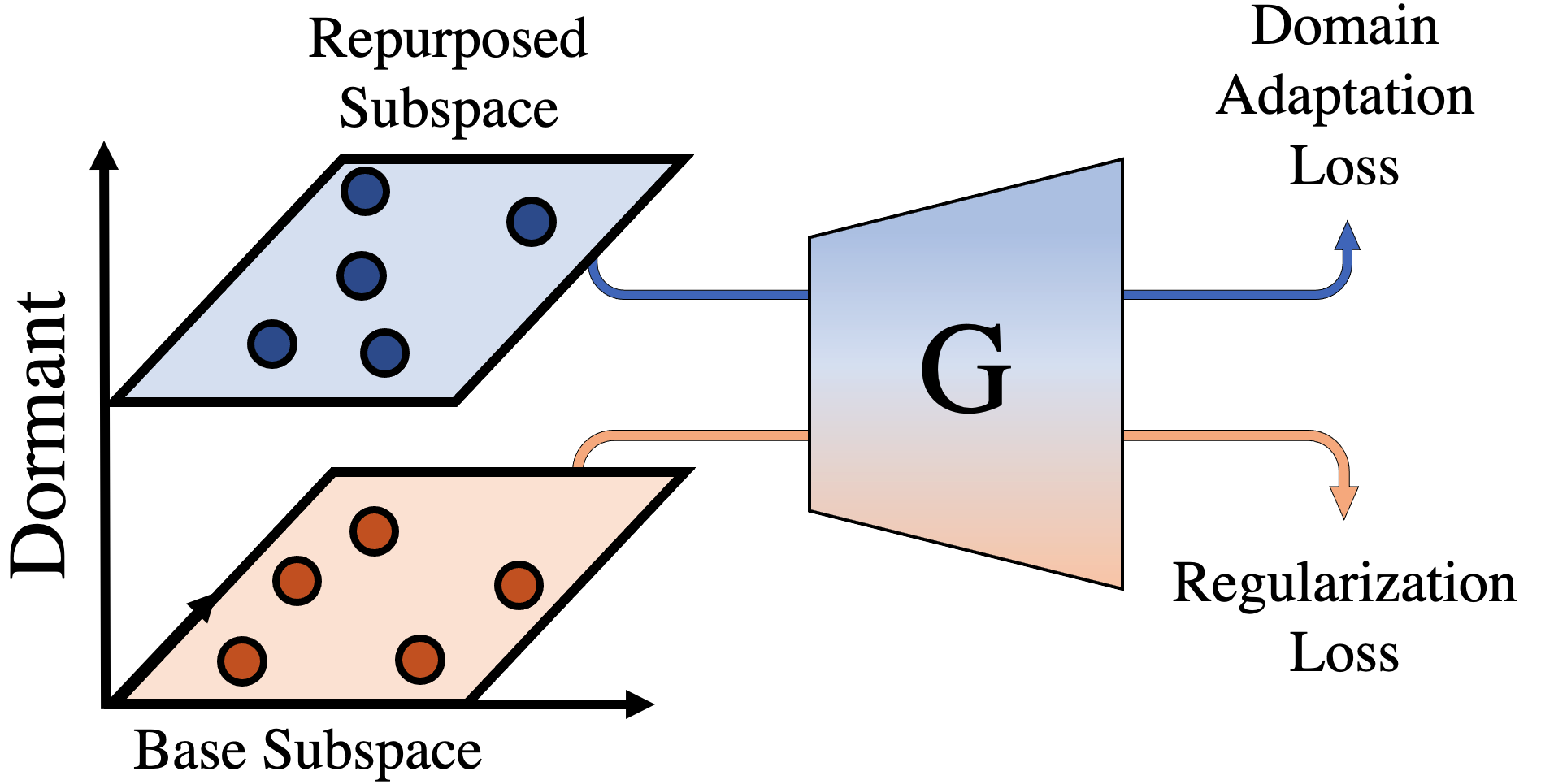

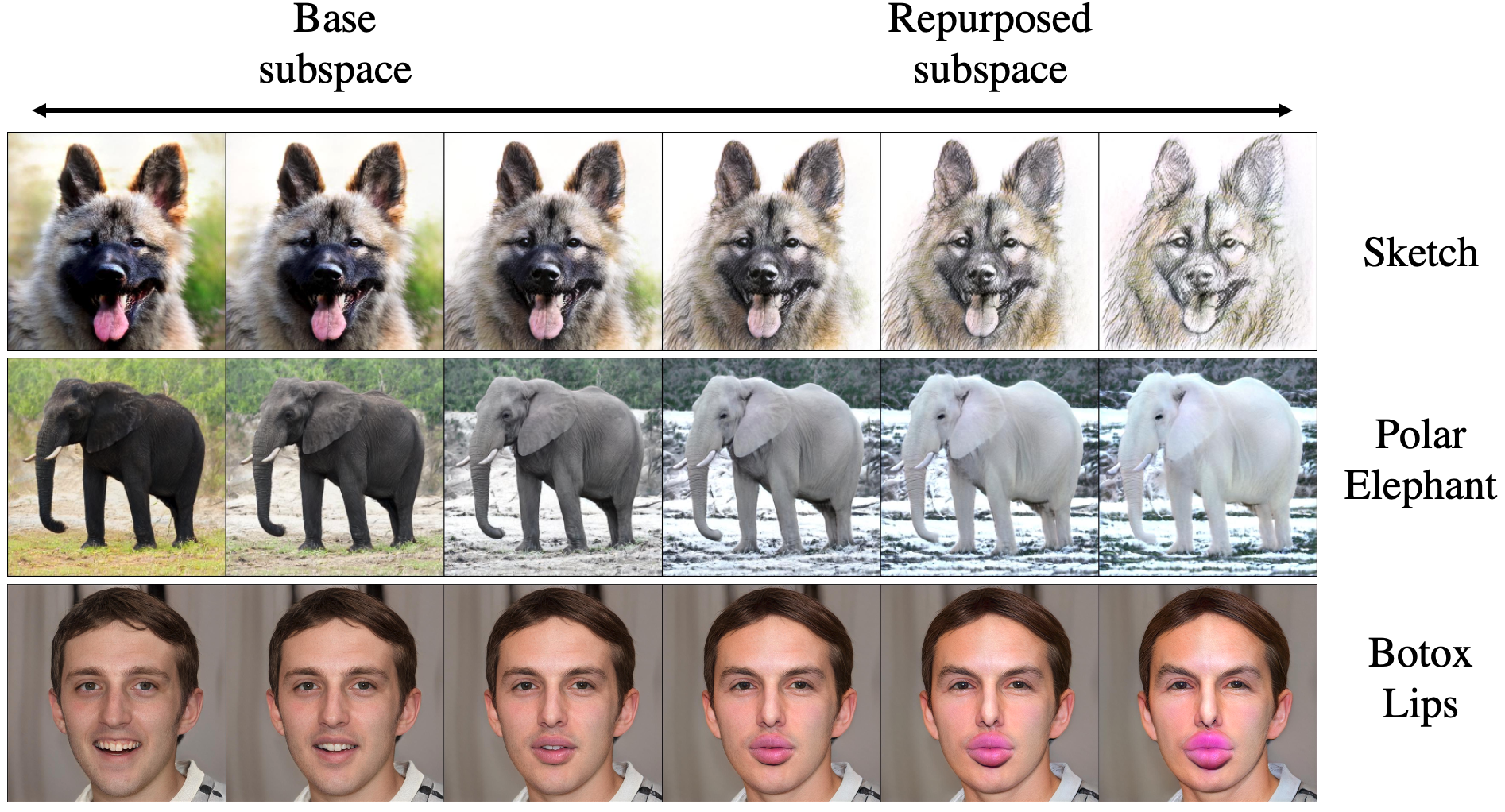

We start by performing an orthogonal decomposition of the model's latent space and identify hundreds of low-magnitude directions, which we call "dormant". Moving a latent code along a dormant direction has no perceptible effect on the generated image, while the remaining directions are sufficient to represent the original domain. We then pick one dormant direction for every domain we wish to add, and call the subspace spanned by all other directions the "base subspace". For each new domain, we transport the base subspace along its dormant direction, defining a parallel subspace we call the "repurposed subspace". To capture the new domain, we apply an off-the-shelf domain adaptation method, modified to operate only on latent codes from the repurposed subspace. Meanwhile, a regularization loss applied on the base subspace ensures that the original domain is preserved. Because the subspaces are parallel and the latent space is disentangled, the factors of variation of the original domain carry over to the new domains.
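To make the geometry concrete, here is a minimal NumPy sketch of the latent-space steps described above. It rests on several assumptions. The orthogonal decomposition is taken to be a PCA (via SVD) of sampled latent codes. A direction's "magnitude" is taken to be its variance. The repurposed subspace is reached by a hand-picked `offset` along the dormant direction. The function names, the `offset` value, the toy generators, and the MSE form of the base-subspace regularizer are all hypothetical illustrations, not the method's actual implementation, and the domain-adaptation loss itself is omitted.

```python
import numpy as np

def decompose_latents(latents):
    """PCA of sampled latent codes: mean, orthonormal directions (rows), per-direction variance."""
    mean = latents.mean(axis=0)
    # Rows of vt are orthonormal principal directions.
    _, s, vt = np.linalg.svd(latents - mean, full_matrices=False)
    variances = s ** 2 / (latents.shape[0] - 1)
    return mean, vt, variances

def pick_dormant(vt, variances, num_domains):
    """Choose one lowest-variance ("dormant") direction per new domain."""
    order = np.argsort(variances)  # ascending variance
    return vt[order[:num_domains]]  # shape (num_domains, dim)

def project_to_base(w, mean, dormant):
    """Remove the components of w (relative to the mean) along the chosen dormant directions."""
    coeffs = (w - mean) @ dormant.T  # shape (n, num_domains)
    return w - coeffs @ dormant

def transport(w, mean, dormant, domain, offset):
    """Shift base-subspace codes along one dormant direction into that domain's repurposed subspace.
    `offset` is a hypothetical hyperparameter."""
    return project_to_base(w, mean, dormant) + offset * dormant[domain]

def base_regularization(generator, frozen_generator, w_base):
    """Hypothetical regularizer: MSE between current and frozen outputs on base-subspace codes."""
    return float(np.mean((generator(w_base) - frozen_generator(w_base)) ** 2))

# Toy latent space: the last two axes barely vary, so they should come out as dormant.
rng = np.random.default_rng(0)
scales = np.array([3.0, 2.0, 1.5, 1.0, 1.0, 0.5, 1e-3, 1e-3])
latents = rng.normal(size=(5000, scales.size)) * scales

mean, vt, variances = decompose_latents(latents)
dormant = pick_dormant(vt, variances, num_domains=2)

w = latents[:4]
w_base = project_to_base(w, mean, dormant)
w_new = transport(w, mean, dormant, domain=0, offset=5.0)

# Toy stand-ins for the original (frozen) generator and one being adapted.
A = rng.normal(size=(scales.size, 3))
frozen = lambda z: np.tanh(z @ A)
adapted = lambda z: np.tanh(z @ (A + 0.1))
reg_same = base_regularization(frozen, frozen, w_base)
reg_changed = base_regularization(adapted, frozen, w_base)
```

Because `transport` only adds a fixed vector to base-subspace codes, each repurposed subspace is a parallel copy of the base subspace. Projecting `w_new` back with `project_to_base` recovers `w_base` exactly, which is the property the explanation above relies on for carrying factors of variation over to the new domain.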